GPUs and Accelerators at the CHPC

The CHPC provides a range of compute nodes equipped with GPUs. These GPU-enabled devices are available on the granite, notchpeak, kingspeak, lonepeak, and redwood clusters (the latter being part of the Protected Environment). This document outlines the hardware specifications, access procedures, and usage guidelines for these resources.

GPU Hardware Overview

In the tables and examples below, the clusters are abbreviated as granite (grn), notchpeak (np), kingspeak (kp), lonepeak (lp), and redwood (rw); redwood is part of the Protected Environment (PE).

Table 1 summarizes the NVIDIA GPU devices available at the CHPC. Its columns are:

- Architecture: the GPU generation or architecture to which a device belongs.

- Device: the model or name of the GPU.

- Compute Capability: defines the hardware features and supported instructions of the device.

- Global Memory: the amount of global memory available on the GPU.

- Area: recommended application area for the GPU, given as one or both of the following labels:
  - AI: the GPU efficiently supports AI workloads.
  - FP64: the GPU supports native double-precision (FP64) floating-point operations.

- Gres Type: the string to specify with the SLURM --gres option to request this GPU type.

- Cluster: the cluster(s) where this GPU type is available.

| Architecture | Device | Compute Capability | Global Memory | Area | Gres Type | Cluster |
|---|---|---|---|---|---|---|
| Hopper | H200 | 9.0 | 141 GB | AI, FP64 | h200, h200_1g.18gb, h200_2g.35gb, h200_3g.71gb | grn, rw |
| | H100 NVL | 9.0 | 96 GB | AI, FP64 | h100nvl, h100 | grn, np, rw |
| Ada Lovelace | L40 S | 8.9 | 48 GB | AI | l40s | grn, np |
| | L40 | 8.9 | 48 GB | AI | l40 | np |
| | L4 Tensor Core | 8.9 | 24 GB | AI | l4 | grn |
| | RTX 6000 Ada | 8.9 | 48 GB | AI | rtx6000 | grn, np, rw |
| | RTX 5000 Ada | 8.9 | 32 GB | AI | rtx5000 | grn, rw |
| | RTX 4500 Ada | 8.9 | 24 GB | AI | rtx4500 | grn |
| | RTX 2000 Ada | 8.9 | 16 GB | AI | rtx2000 | grn |
| Ampere | A100-SXM4-80GB | 8.0 | 80 GB | AI, FP64 | a100 | np |
| | A100-PCIE-80GB | 8.0 | 80 GB | AI, FP64 | a100 | np, rw |
| | A100-SXM4-40GB | 8.0 | 40 GB | AI, FP64 | a100 | rw |
| | A100-PCIE-40GB | 8.0 | 40 GB | AI, FP64 | a100 | np |
| | A30 | 8.0 | 24 GB | AI, FP64 | a30 | rw |
| | A800 40GB Active | 8.0 | 40 GB | AI, FP64 | a800 | grn, np |
| | RTX A6000 | 8.6 | 48 GB | AI | a6000 | np |
| | A40 | 8.6 | 48 GB | AI | a40 | np, rw |
| | GeForce RTX 3090 | 8.6 | 24 GB | AI | 3090 | np |
| | RTX A5500 | 8.6 | 24 GB | AI | a5500 | np |
| Turing | GeForce RTX 2080 Ti | 7.5 | 11 GB | AI | 2080ti | np |
| | Tesla T4 | 7.5 | 16 GB | AI | t4 | np |
| Volta | V100-PCIE-16GB | 7.0 | 16 GB | AI, FP64 | v100 | np |
| | TITAN V | 7.0 | 12 GB | AI, FP64 | titanv | np |
| Pascal | Tesla P100-PCIE-16GB | 6.0 | 16 GB | AI, FP64 | p100 | kp |
| | Tesla P40 | 6.1 | 24 GB | AI | p40 | np |
| | GeForce GTX 1080 Ti | 6.1 | 11 GB | AI | 1080ti | lp, rw |
| Maxwell | GeForce GTX Titan X | 5.2 | 12 GB | AI | titanx | kp |

A detailed description of the features of each GPU type can be found here.

Getting access to CHPC's GPUs

- To access CHPC's GPU resources, you must first have an active (i.e., non-locked) CHPC account. Information on how to obtain a CHPC account can be found here.

- The procedures for accessing GPUs on the various CHPC clusters are outlined below:

  - General nodes (i.e., nodes purchased by the CHPC) on the notchpeak, kingspeak, lonepeak and redwood clusters: all users have free access to these nodes.

  - General nodes on the granite cluster: an allocation is required to use these GPUs. You can apply for an allocation here. If your allocation is exhausted, you can still run jobs in freecycle mode, but they may be preempted.

  - Owner nodes on the granite, notchpeak, kingspeak, lonepeak and redwood clusters:
    - All users have guest access to the GPUs on owner nodes. You may use them when they are idle, but your jobs may be preempted.
    - Dedicated access (owner mode):
      - If you belong to the owner's group, access to the owner nodes is granted by default.
      - If you are from another group and collaborate with the PI who owns the nodes, the PI must email helpdesk@chpc.utah.edu to request that you be added to the owner's partition.

  - One-U Responsible AI (RAI) initiative owner nodes: any University of Utah researcher with an AI-related project may request owner-mode access by emailing helpdesk@chpc.utah.edu.

The notchpeak cluster also has a special partition with a few modest GPUs (notchpeak-shared-short) for jobs with a small footprint (a maximum of 8 cores and a maximum walltime of 8 hours). Anyone with an active CHPC account has access to this partition, which has neither allocation requirements nor preemption. The redwood cluster has a similar special partition that includes GPUs (redwood-shared-short).

Running SLURM Jobs Using GPUs

Access to GPU-enabled nodes is managed through SLURM job scheduling. You can access these nodes in three ways:

- by submitting a SLURM batch script using sbatch

- interactively using salloc

- by using the CHPC's Open OnDemand web portal

The first two methods are described in detail on our SLURM documentation page. However, using GPUs requires specific SLURM options, and some standard options must be set differently:

- The --account and --partition options must be set to GPU-specific values. When using GPUs on the granite cluster, the --qos option is also mandatory.

- The --gres option is mandatory to request GPU resources.

- The --mem option is optional, depending on your job's memory requirements.

In the following sections, we discuss the --account, --partition, --qos, --gres, and --mem options in more detail.

1. The --account, --partition [and --qos] SLURM options

Access to GPU nodes is determined by the SLURM options available to your account. Depending on the access you have been granted, you can use specific combinations of the --partition, --account, and (optionally) --qos options on each cluster.

The command mychpc batch displays all valid combinations of --account, --partition, and --qos (for both CPU and GPU jobs) that you are currently authorized to use.

Note: Only options that appear together on the same output line form a valid combination.

In addition to showing valid combinations, mychpc batch also displays the non-occupancy (i.e., idleness) of each partition.

Example output:

```
[u0253283@notchpeak2:~]$ mychpc batch | grep gpu
GPU --partition=rai-gpu-grn --qos=rai-gpu-grn --account=rai [81% idle]
GPU --partition=soc-gpu-kp --qos=soc-gpu-kp --account=soc-gpu-kp [25% idle]
GPU --partition=granite-gpu --qos=granite-gpu --account=chpc [0% idle]
GPU --partition=kingspeak-gpu --qos=kingspeak-gpu --account=kingspeak-gpu [94% idle]
GPU --partition=lonepeak-gpu --qos=lonepeak-gpu --account=lonepeak-gpu [25% idle]
GPU --partition=notchpeak-gpu --qos=notchpeak-gpu --account=notchpeak-gpu [46% idle]
GPU --partition=granite-gpu --qos=granite-gpu-freecycle --account=cs6230 [0% idle]
GPU --partition=granite-gpu --qos=granite-gpu-freecycle --account=rai [0% idle]
GPU --partition=granite-gpu-guest --qos=granite-gpu-guest --account=chpc [43% idle]
GPU --partition=granite-gpu-guest --qos=granite-gpu-guest --account=cs6230 [43% idle]
GPU --partition=kingspeak-gpu-guest --qos=kingspeak-gpu-guest --account=owner-gpu-guest [25% idle]
GPU --partition=notchpeak-gpu-guest --qos=notchpeak-gpu-guest --account=owner-gpu-guest [69% idle]
```
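If you script your job submissions, the options on one of these lines can be extracted programmatically. The Bash sketch below assumes the line layout shown in the sample output above (the word GPU, then the three options, then "[NN% idle]"); the function names are hypothetical helpers, not CHPC tools.

```bash
#!/usr/bin/env bash
# Sketch: turn one line of `mychpc batch` output into SLURM options.
# Assumes lines shaped like the sample output:
#   GPU --partition=P --qos=Q --account=A [NN% idle]

# Print the --partition/--qos/--account options found on the line.
mychpc_line_to_opts() {
    local -a words opts=()
    local word
    read -r -a words <<< "$1"
    for word in "${words[@]}"; do
        case $word in
            --partition=*|--qos=*|--account=*) opts+=("$word") ;;
        esac
    done
    echo "${opts[*]}"
}

# Print the idle percentage (digits only) from the trailing "[NN% idle]".
mychpc_line_idle() {
    sed -n 's/.*\[\([0-9]*\)% idle].*/\1/p' <<< "$1"
}

# Example (on a CHPC node): the most idle GPU combination you may use.
#   mychpc batch | grep '^GPU' | while read -r line; do
#       echo "$(mychpc_line_idle "$line") $(mychpc_line_to_opts "$line")"
#   done | sort -rn | head -n 1
```

Because the idle percentage changes constantly, treat it as a hint for choosing a partition, not as a guarantee of a short queue wait.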

Partition Naming Conventions

Previously, we described the distinction between general nodes (purchased by CHPC) and owner nodes (purchased by individual PIs). This distinction is reflected in the naming of the --partition, --account, and --qos flags.

- General nodes

  - notchpeak, kingspeak, lonepeak, redwood (no allocation required):

    ```
    --partition=$(CLUSTERNAME)-gpu
    --account=$(CLUSTERNAME)-gpu
    --qos=$(CLUSTERNAME)-gpu         # optional
    ```

    Replace $(CLUSTERNAME) with the cluster name (e.g., notchpeak).

  - granite, with an allocation:

    ```
    --partition=granite-gpu
    --account=$(OWNER)
    --qos=granite-gpu                # mandatory
    ```

    Replace $(OWNER) with the group name.

  - granite, freecycle mode (jobs may be preempted). You may use freecycle mode even if you have an active allocation; however, we recommend using your allocation first.

    ```
    --partition=granite-gpu
    --account=$(OWNER)
    --qos=granite-gpu-freecycle      # mandatory
    ```

    Replace $(OWNER) with the group name.

- Owner nodes

  - notchpeak, kingspeak, lonepeak, redwood, owner mode (for users whose PI owns the nodes):

    ```
    --partition=$(OWNER)-gpu-$(SUF)
    --account=$(OWNER)-gpu-$(SUF)
    --qos=$(OWNER)-gpu-$(SUF)        # optional
    ```

    where $(OWNER) is the group name and $(SUF) is the cluster suffix: np, kp, lp or rw.

  - notchpeak, kingspeak, lonepeak, redwood, guest mode (access to all owner nodes):

    ```
    --partition=$(CLUSTERNAME)-gpu-guest
    --account=owner-gpu-guest
    --qos=$(CLUSTERNAME)-gpu-guest   # optional
    ```

    Replace $(CLUSTERNAME) with the cluster name.

  - granite, owner mode:

    ```
    --partition=$(OWNER)-gpu-grn
    --account=$(OWNER)
    --qos=$(OWNER)-gpu-grn           # mandatory
    ```

    Replace $(OWNER) with the group name.

  - granite, guest mode:

    ```
    --partition=granite-gpu-guest
    --account=$(OWNER)
    --qos=granite-gpu-guest          # mandatory
    ```

    Replace $(OWNER) with the group name.
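The naming conventions above are regular enough to be generated. The following Bash sketch encodes them in a hypothetical helper, chpc_gpu_opts; it only prints option strings and assumes the cluster suffixes listed above (np, kp, lp, rw, and grn for granite). Always confirm the result against mychpc batch, which remains the authoritative list of combinations you may use.

```bash
#!/usr/bin/env bash
# Sketch: print --partition/--account/--qos following the naming conventions.
# Usage: chpc_gpu_opts <general|freecycle|owner|guest> <cluster> [group]
chpc_gpu_opts() {
    local mode=$1 cluster=$2 group=$3 suf
    if [[ $cluster == granite ]]; then
        # On granite the account is your group and --qos is mandatory.
        case $mode in
            general)   echo "--partition=granite-gpu --account=$group --qos=granite-gpu" ;;
            freecycle) echo "--partition=granite-gpu --account=$group --qos=granite-gpu-freecycle" ;;
            owner)     echo "--partition=$group-gpu-grn --account=$group --qos=$group-gpu-grn" ;;
            guest)     echo "--partition=granite-gpu-guest --account=$group --qos=granite-gpu-guest" ;;
            *) echo "unknown mode: $mode" >&2; return 1 ;;
        esac
        return
    fi
    # Cluster suffixes used in owner partition names.
    case $cluster in
        notchpeak) suf=np ;;
        kingspeak) suf=kp ;;
        lonepeak)  suf=lp ;;
        redwood)   suf=rw ;;
        *) echo "unknown cluster: $cluster" >&2; return 1 ;;
    esac
    case $mode in
        general) echo "--partition=$cluster-gpu --account=$cluster-gpu --qos=$cluster-gpu" ;;
        owner)   echo "--partition=$group-gpu-$suf --account=$group-gpu-$suf --qos=$group-gpu-$suf" ;;
        guest)   echo "--partition=$cluster-gpu-guest --account=owner-gpu-guest --qos=$cluster-gpu-guest" ;;
        *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
}

# Example: salloc $(chpc_gpu_opts guest notchpeak) --gres=gpu:a40:1 -t 1:00:00
```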

Additional Notes

- SLURM options have short forms:

--partition=→-p--account=→-A--qos=→-q

-

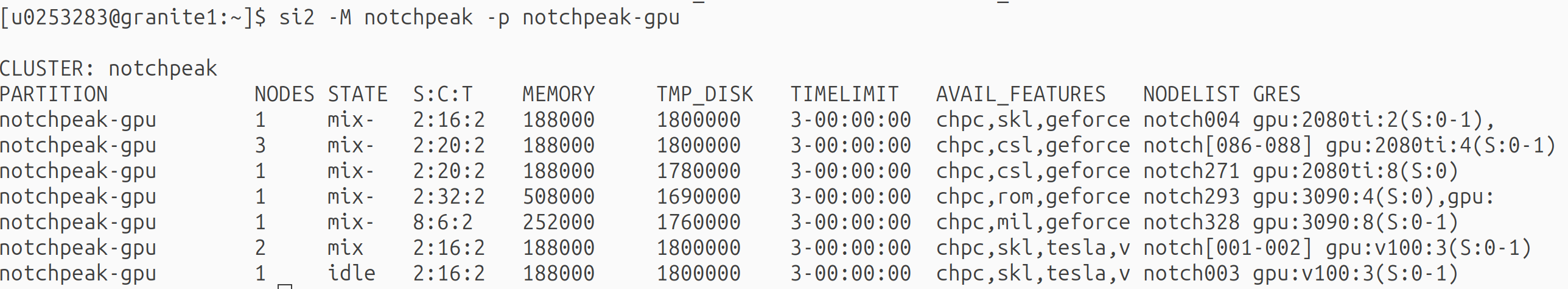

To view available resources in a partition:

si2 [-M $(CLUSTER)] --partition=$(PARTITION)or using short form:

si2 [-M $(CLUSTER)] -p $(PARTITION)The

-M $(CLUSTER)flag is optional and allows you to query partitions on a different cluster than the one you're currently on. Thesi2andsicommands are convenient aliases provided by CHPC. More information about them can be found here.

2. The --gres SLURM option

To use GPU devices, you must first have access to a SLURM partition that includes GPUs (as outlined previously). Additionally, you must explicitly request GPU resources in your SLURM job using the --gres option, which follows this syntax:

--gres=gpu[:<gres_type>]:<num_devices>

where:

- gpu (mandatory): specifies that GPU resources are being requested.

- <gres_type> (optional): specifies the type of GPU you want to use.
  - If provided, it must match a gres_type available in the selected partition. You can check the gres_type values available in a partition with: si2 -p <PARTITION>
  - If not specified, SLURM will allocate any available GPU in the partition.

- <num_devices> (optional): the number of GPU devices to request.
  - If omitted, SLURM will allocate one GPU by default.
  - The maximum number of devices (including MIGs; see below) you can request is limited to the number available on a single node.
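As a small illustration of the --gres syntax, the Bash sketch below builds the option string from an optional gres_type and an optional device count. The helper name gres_opt is hypothetical, and it does not check whether the type actually exists in your partition (use si2 -p <PARTITION> for that).

```bash
#!/usr/bin/env bash
# Sketch: build --gres=gpu[:<gres_type>]:<num_devices>.
# Usage: gres_opt [gres_type] [num_devices]   (empty type = any GPU)
gres_opt() {
    local gtype=$1 num=${2:-1}   # one device when no count is given
    if ! [[ $num =~ ^[1-9][0-9]*$ ]]; then
        echo "num_devices must be a positive integer" >&2
        return 1
    fi
    if [[ -n $gtype ]]; then
        echo "--gres=gpu:$gtype:$num"
    else
        echo "--gres=gpu:$num"   # any GPU type in the partition
    fi
}

# Example: sbatch $(gres_opt rtx6000 1) myjob.slurm
```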

Available gres_type values

All available gres_type values on CHPC clusters are listed in the Gres Type column of Table 1. Typically, each GPU device type has a corresponding gres_type, with the following exceptions:

- H100 GPUs
  - h100nvl: used on the granite (grn) cluster
  - h100: used on the redwood (rw) cluster

- H200 GPUs
  - h200: refers to full physical H200 GPU devices

- MIG (Multi-Instance GPU support)

NVIDIA's MIG technology allows a single physical GPU to be partitioned into multiple isolated instances. Each MIG has its own memory, cache, and compute cores. A single GPU can support up to 7 MIGs.

MIG configurations available on notchpeak and redwood clusters include:

-

h200_1g.18gb:- 18 GB global memory per MIG

- Each node has 8 GPUs × 7 MIGs = 56 MIGs per node

-

h200_2g.35gb:- 35 GB global memory per MIG

- Each node has 2 MIGs per GPU × 8 GPUs = 16 MIGs per node

-

h200_3g.71gb:- 71 GB global memory per MIG

- Each node has 1 MIG per GPU × 8 GPUs = 8 MIGs per node

-

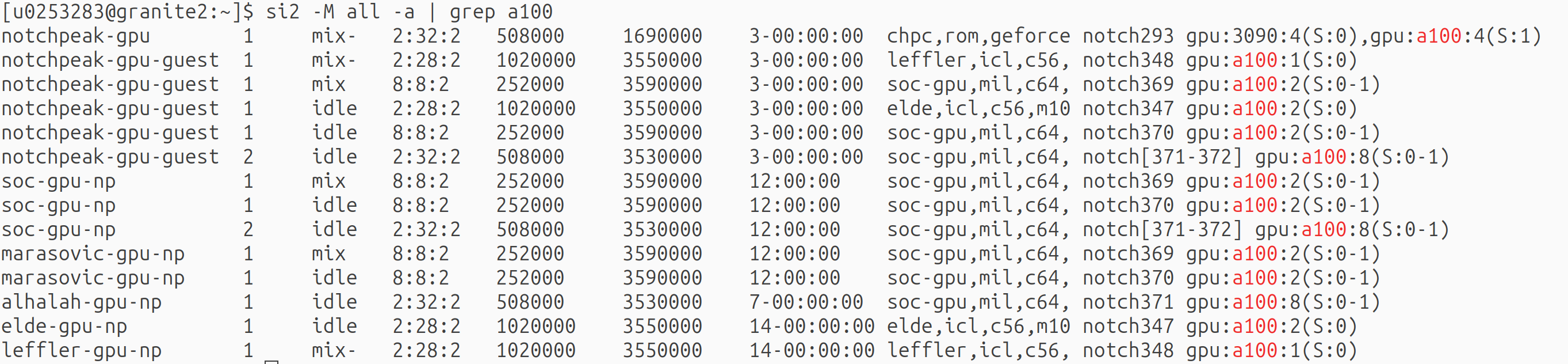
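When a full H200 is more than a job needs, one way to choose among the H200 gres_type values is to take the smallest one whose global memory covers the job's estimated GPU memory footprint. The Bash sketch below does that using the sizes listed above; the helper name is hypothetical, the estimate (in whole GB) is up to you, and you should leave some headroom because the CUDA context itself also consumes GPU memory.

```bash
#!/usr/bin/env bash
# Sketch: smallest H200 gres_type whose global memory covers <GB needed>.
# Sizes follow the MIG list above (18, 35, 71 GB) and the full H200 (141 GB).
pick_h200_slice() {
    local need=$1
    if ! [[ $need =~ ^(0|[1-9][0-9]*)$ ]]; then
        echo "usage: pick_h200_slice <GB, whole number>" >&2
        return 1
    fi
    if   (( need <= 18 ));  then echo h200_1g.18gb
    elif (( need <= 35 ));  then echo h200_2g.35gb
    elif (( need <= 71 ));  then echo h200_3g.71gb
    elif (( need <= 141 )); then echo h200
    else
        echo "more than one H200 (141 GB) is needed" >&2
        return 1
    fi
}

# Example: salloc ... --gres=gpu:$(pick_h200_slice 30):1
```

Check with si2 that the partition you target actually offers the returned gres_type before submitting.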

Cluster-Wide GPU Overview

To view all partitions and their associated features and gres_type values across all CHPC clusters (including both the general and protected environments), use:

si2 -M all -a

This command provides a comprehensive overview of GPU availability and configuration.

3. The --mem SLURM option

Node Sharing and GPU Job Performance

Most GPU jobs do not utilize all the GPUs available on a node. As a result, these jobs often share the node’s CPU resources—including CPU cores, memory, and I/O—with other jobs. In some cases, even GPU-related resources (such as data transfer bandwidth between CPU and GPU) may be shared.

Because of this, the performance of your job can be affected by other jobs running on the same node. If you are conducting benchmarking or performance-sensitive tasks, it is recommended to request exclusive access to the entire node, even if your job does not require all of its resources.

How Node Sharing Works

Node sharing occurs when you request fewer than the total number of GPUs, CPU cores, and/or memory available on a node. You can share a node based on:

- Number of GPUs

- Number of CPU cores

- Amount of memory

- Or any combination of the above

By default, each job is allocated 2 GB of memory per requested core, which is the lowest common denominator across CHPC clusters. If you need more memory, use the --mem option to specify the desired amount.

To request all of the node's memory (which effectively gives your job exclusive use of the node), set:

--mem=0
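The default-memory rule above amounts to simple arithmetic. The following Bash sketch (a hypothetical helper, assuming the 2 GB-per-core default stated above) prints the --mem option a job needs, or nothing when the default is already sufficient.

```bash
#!/usr/bin/env bash
# Sketch: decide whether a job needs an explicit --mem option.
# Assumes the default of 2 GB of CPU memory per requested core.
# Usage: mem_opt <cores> <needed_GB | whole>
mem_opt() {
    local cores=$1 need=$2
    local default_gb=$(( cores * 2 ))
    if [[ $need == whole ]]; then
        echo "--mem=0"            # all of the node's memory
    elif (( need > default_gb )); then
        echo "--mem=${need}G"     # the default is too small: ask explicitly
    fi                            # otherwise print nothing: the default suffices
}

# Example: 6 cores get 12 GB by default, so a 40 GB job needs --mem=40G.
#   salloc -n 6 $(mem_opt 6 40) ...
```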

Note: Open OnDemand simplifies the user experience. Once you select a combination of account, partition, and QoS, the list of GPUs available for that selection is populated automatically. The amount of CPU memory can still be adjusted as needed.

Examples

Sbatch Jobs

Example 1

The following script includes:

- Resource requests for 1 node, 6 tasks, and 40 GB of CPU memory.
- GPU allocation using --gres=gpu:rtx6000:1.
- Email notifications for all job events.
- Environment setup for deep learning.
- GPU detection and validation using nvidia-smi.
- GPU monitoring with the CHPC gpu-monitor.sh script.
- Execution of a Python script (mycode.py) from a specified working directory.
- Logging of job metadata and runtime environment.

```bash
#!/bin/bash
#SBATCH --time=01:00:00
#SBATCH --nodes=1
#SBATCH --ntasks=6
#SBATCH --mem=40G
#SBATCH --account=owner-gpu-guest
#SBATCH --partition=notchpeak-gpu-guest
#SBATCH --gres=gpu:rtx6000:1
#SBATCH --mail-type=ALL
# Replace <your_email> with your email address:
#SBATCH --mail-user=<your_email>
#SBATCH --job-name=Pytorch-Example

module load deeplearning

export WORKDIR=$HOME/TestBench/Torch

# Job info
printf "Job %s started on %s\n" "$SLURM_JOBID" "$(date)"
printf " #Nodes:%s\n" "$SLURM_NNODES"
printf " #Tasks:%s\n" "$SLURM_NTASKS"
printf " Hostname:%s\n" "$(hostname)"

# Check whether a GPU has been detected.
# nvidia-smi -L prints one "GPU <n>: ..." line per visible device
# (and "No devices found." otherwise), so count the "GPU " lines.
NGPUS=$(nvidia-smi -L 2>/dev/null | grep -c '^GPU ')
if (( NGPUS == 0 )); then
    printf " ERROR: No GPU has been detected!\n"
    printf " Goodbye!\n"
    exit 1
fi
printf "\n %d GPU(s) detected:\n" "$NGPUS"
nvidia-smi -L
printf " CUDA_VISIBLE_DEVICES:%s\n\n" "$CUDA_VISIBLE_DEVICES"

# Perform the work (and monitor it)
cd "$WORKDIR" || exit 1
printf " pwd:%s\n" "$(pwd)"
printf " python:%s\n" "$(which python)"
/uufs/chpc.utah.edu/sys/installdir/chpcscripts/gpu/gpu-monitor.sh &
python mycode.py > "$SLURM_JOBID.out" 2>&1
printf "Job %s ended on %s\n" "$SLURM_JOBID" "$(date)"
```

Example 2

The following job requests 8 NVIDIA 3090 GPUs on a single node, together with all of the node's memory (--mem=0), and launches 8 MPI tasks so that each task can use one GPU.

```bash
#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks=8
#SBATCH --mem=0
#SBATCH --partition=notchpeak-gpu
#SBATCH --account=notchpeak-gpu
#SBATCH --gres=gpu:3090:8
#SBATCH --time=1:00:00

# ... prepare scratch directory, etc.

mpirun -np "$SLURM_NTASKS" myprogram.exe
```

Interactive Jobs

- The following command requests interactive access to two 3090 GPUs on the notchpeak-gpu partition. The CPU memory allocated is 2 GB per task; if you need more memory, add the --mem flag.

  salloc --ntasks=2 --nodes=1 --time=01:00:00 --partition=notchpeak-gpu --account=notchpeak-gpu --gres=gpu:3090:2

- The following command requests one entire H200 device in the granite guest partition, with 40 GB of CPU memory. Replace $GROUP with the name of the group you belong to.

  salloc -N 1 -n 12 -A $GROUP -p granite-gpu-guest --qos=granite-gpu-guest --gres=gpu:h200:1 -t 1:00:00 --mem=40G

- The following command requests one MIG instance (the smallest configuration, with 18 GB of global memory) from the rai-gpu-grn partition. Note that 24 GB of CPU memory is allocated.

  salloc -N 1 -n 12 -A rai -p rai-gpu-grn --qos=rai-gpu-grn --gres=gpu:h200_1g.18gb:1 -t 1:00:00 --mem=24G

Best Practices

1. How to select the right GPU

CHPC clusters provide a wide variety of GPU devices. Depending on the nature of your research problem, certain GPU types may be better suited to your needs. The following questions can help guide your selection:

- Does your code require double-precision (FP64) operations?
  If so, choose a GPU that offers native FP64 support. These GPUs are labeled "FP64" in Table 1.

- How much global memory does your application require?
  The required memory depends on the size of your input/output data and the memory demands of your GPU kernels. For deep learning models, memory needs are also influenced by the number of parameters, the batch size, and the numerical precision (e.g., FP32 vs. FP16).

- Does your application benefit from Tensor Cores?
  If so, have a look at the hardware page to identify GPUs that support Tensor Core acceleration.

- Does your application require a specific GPU generation or compute capability?
  Some applications are only compatible with newer GPU architectures. The compute capability of each GPU type is listed in Table 1.

Based on your requirements, you can target specific GPU types using the appropriate --gres string (see Table 1 for the available values). Additionally, the si and si2 commands provide useful information about:

- the available GPU types (gres_type)
- the nodes on which those GPUs are located
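To narrow down candidates from Table 1, the Bash sketch below filters a copy of the table's Gres Type, Global Memory, and FP64 columns by a minimum memory size and, optionally, by native FP64 support. The data is transcribed from Table 1 and will go stale as hardware changes; the MIG types are omitted, and because the a100 type covers both 40 GB and 80 GB devices, the smaller size is used. The helper name find_gpus is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list gres_type values from Table 1 that satisfy a job's needs.
# Columns: gres_type, global memory (GB), native FP64 (1 = yes, 0 = no).
GPU_TABLE='h200 141 1
h100nvl 96 1
h100 96 1
l40s 48 0
l40 48 0
l4 24 0
rtx6000 48 0
rtx5000 32 0
rtx4500 24 0
rtx2000 16 0
a100 40 1
a30 24 1
a800 40 1
a6000 48 0
a40 48 0
3090 24 0
a5500 24 0
2080ti 11 0
t4 16 0
v100 16 1
titanv 12 1
p100 16 1
p40 24 0
1080ti 11 0
titanx 12 0'

# Usage: find_gpus <min_memory_GB> [1 = FP64 required, 0 = don't care]
find_gpus() {
    awk -v m="$1" -v f="${2:-0}" '
        $2 >= m && (f == 0 || $3 == 1) { out = out (out == "" ? "" : " ") $1 }
        END { print out }' <<< "$GPU_TABLE"
}

# Example: FP64 GPUs with at least 40 GB, then check idleness with freegpus.
#   find_gpus 40 1
```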

2. Which GPUs are currently idle?

To check which GPUs are currently idle in each partition, use the freegpus command. Note that an idle GPU is not necessarily available for use: for example, the node's CPU memory might be fully used by other jobs. By default, this command scans all clusters and partitions. For a full list of options, run:

freegpus --help

For example, to list idle GPUs on the notchpeak-gpu partition, run:

```
[u0253283@notchpeak1:~]$ freegpus -p notchpeak-gpu
SUMMARY: notchpeak-gpu
3090 (x3)
a100 (x1)
```

The output lists each GPU type followed, in parentheses, by the number of idle GPUs of that type (e.g., (x3) means three idle GPUs).
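If you want to use freegpus output in a script, for example to pick a partition with idle GPUs of the right type, the summary lines can be parsed as in the following Bash sketch. It assumes each GPU line has the form "<gres_type> (x<count>)", as in the sample above; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read freegpus SUMMARY output on stdin.
# Assumes GPU lines look like "<gres_type> (x<count>)".

# Idle count for one GPU type (0 if the type is not listed).
freegpus_count() {
    awk -v t="$1" '$1 == t && $2 ~ /^\(x[0-9]+\)$/ { n += substr($2, 3, length($2) - 3) }
        END { print n + 0 }'
}

# Total idle GPUs over all listed types.
freegpus_total() {
    awk '$2 ~ /^\(x[0-9]+\)$/ { n += substr($2, 3, length($2) - 3) }
        END { print n + 0 }'
}

# Example: freegpus -p notchpeak-gpu | freegpus_count a100
```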

3. How to verify that your GPUs are detected

NVIDIA provides the NVIDIA System Management Interface program (nvidia-smi), a powerful command-line tool built on NVIDIA's NVML library. It offers a wide range of options for monitoring and managing GPU resources. Commonly used commands include:

- nvidia-smi -L
  Lists all NVIDIA GPUs in the SLURM job (or on the system), along with their UUIDs (hardware identifiers).

- nvidia-smi -q
  Displays detailed information about each GPU, including usage, temperature, and memory.

- nvidia-smi --query-gpu=name,memory.total,memory.used,utilization.gpu --format=csv
  Outputs the GPU name, total memory, memory usage, and utilization percentage in CSV format.

Another way to verify whether GPUs have been allocated to your job is to inspect the environment variable CUDA_VISIBLE_DEVICES. This variable lists the local IDs of the GPUs assigned to your job. If it is empty or unset, no GPUs have been allocated.
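The CUDA_VISIBLE_DEVICES check can be turned into a guard at the top of a job script. The Bash sketch below counts the comma-separated IDs; the function name is hypothetical, and the sketch relies only on the comma-separated format described above.

```bash
#!/usr/bin/env bash
# Sketch: count the GPUs assigned to the job via CUDA_VISIBLE_DEVICES
# (a comma-separated list of IDs; empty or unset means none).
count_visible_gpus() {
    local -a ids=()
    [[ -n ${CUDA_VISIBLE_DEVICES:-} ]] && IFS=, read -r -a ids <<< "$CUDA_VISIBLE_DEVICES"
    echo "${#ids[@]}"
}

# Example guard near the top of a job script:
#   if (( $(count_visible_gpus) == 0 )); then
#       echo "ERROR: no GPU allocated (did you forget --gres?)" >&2
#       exit 1
#   fi
```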

Example 1: No GPUs Allocated

In the following example, a job is launched on the kingspeak-gpu partition without the --gres option. As a result, no GPUs are allocated:

```
[u0253283@kingspeak2:~]$ salloc --nodes=1 --ntasks=4 --mem=20G --account=kingspeak-gpu --partition=kingspeak-gpu
salloc: Granted job allocation 14231524
salloc: Waiting for resource configuration
salloc: Nodes kp297 are ready for job
[u0253283@kp297:~]$ nvidia-smi -L
No devices found.
[u0253283@kp297:~]$ env | grep CUDA_VISIBLE_DEVICES
[u0253283@kp297:~]$
```

Example 2: GPUs Allocated

In this example, the same command is used, but with the --gres option requesting 4 Titan X GPUs:

```
[u0253283@kingspeak1:~]$ salloc --nodes=1 --ntasks=4 --mem=20G --account=kingspeak-gpu --partition=kingspeak-gpu --gres=gpu:titanx:4
salloc: Granted job allocation 14231517
salloc: Waiting for resource configuration
salloc: Nodes kp297 are ready for job
[u0253283@kp297:~]$ env | grep CUDA_VISIBLE_DEVICES
CUDA_VISIBLE_DEVICES=0,1,2,3
[u0253283@kp297:~]$ nvidia-smi -L
GPU 0: NVIDIA GeForce GTX TITAN X (UUID: GPU-cd731d6a-ee18-f902-17ff-1477cc59fc15)
GPU 1: NVIDIA GeForce GTX TITAN X (UUID: GPU-77610a0f-57a2-75ec-99ae-0c52bd63f74f)
GPU 2: NVIDIA GeForce GTX TITAN X (UUID: GPU-765a4c3d-f5ac-084f-c2b2-4c00d25f2797)
GPU 3: NVIDIA GeForce GTX TITAN X (UUID: GPU-2ec75e81-a267-69d8-6107-435288f6502b)
```

The non-empty CUDA_VISIBLE_DEVICES output and the nvidia-smi -L listing confirm that GPUs have been successfully allocated.

Note: In addition to CUDA_VISIBLE_DEVICES, there are other CUDA-related environment variables that may be relevant. For a complete list, please have a look here.

4. How to Monitor Multiple GPU Jobs on a Shared Node

Each GPU job runs within its own cgroup. When you have multiple GPU jobs running on the same node, logging into that node via SSH places you inside the cgroup of one of those jobs. As a result, tools like nvidia-smi only show information for that specific job, and you cannot monitor the others this way.

To check the status of a different job, use the following command:

srun --pty --overlap --jobid $JOBID /usr/bin/nvidia-smi

Replace $JOBID with the job ID of the job you want to monitor.
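With several jobs on a node, the srun command above can be looped over your running job IDs. The Bash sketch below is one way to do this; it drops --pty because the output is simply printed, and SRUN is a hypothetical override used only to dry-run the loop (e.g., SRUN=echo).

```bash
#!/usr/bin/env bash
# Sketch: run nvidia-smi inside each given job, one after another.
# SRUN is a hypothetical override for dry runs; it defaults to srun.
watch_gpu_jobs() {
    local id
    for id in "$@"; do
        echo "== job $id =="
        "${SRUN:-srun}" --overlap --jobid "$id" /usr/bin/nvidia-smi
    done
}

# Example (on a CHPC node): all of your running jobs.
#   watch_gpu_jobs $(squeue -h -u "$USER" -t RUNNING -o %i)
```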

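5. How to Monitor GPU Performance within Your SLURM Job

To monitor GPU utilization during a job, add the following line to your SLURM script before launching your program executable:

/uufs/chpc.utah.edu/sys/installdir/chpcscripts/gpu/gpu-monitor.sh &

The monitor runs in the background and writes to the file $SLURM_JOBID.gpulog, where GPU utilization data is recorded every 5 minutes.

GPU Programming Environment and Performance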

NVIDIA CUDA Toolkit

The NVIDIA CUDA Toolkit includes both the CUDA drivers and the CUDA Development Environment. Key components of the development environment include:

- CUDA C++ Core Compute Libraries (e.g., Thrust, libcu++)
- CUDA Runtime Library (cudart)
- CUDA Debugger (cuda-gdb)
- CUDA C/C++ Compiler (nvcc)
- CUDA Profiler (nvprof)
- NVIDIA Nsight
- CUDA Math Libraries, such as cuBLAS, cuFFT, cuRAND, cuSOLVER, and cuSPARSE

To explicitly load a specific version of the CUDA development environment, use the following command:

module load cuda/<version>

To view all available CUDA versions, run:

module spider cuda

Compiling with nvcc

The standard NVIDIA C/C++ compiler is called nvcc.

Table 1 lists all CHPC GPU devices along with their architecture and compute capability. When compiling CUDA code, it is important to target the appropriate GPU architectures. Use the following nvcc compiler flags to support all current GPU architectures at the CHPC:

```
-gencode arch=compute_52,code=sm_52 \
-gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 \
-gencode arch=compute_70,code=sm_70 -gencode arch=compute_75,code=sm_75 \
-gencode arch=compute_80,code=sm_80 -gencode arch=compute_86,code=sm_86 \
-gencode arch=compute_89,code=sm_89 -gencode arch=compute_90,code=sm_90
```

For more information on the CUDA compilation and linking flags, please see the CUDA C++ Programming Guide.
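Rather than typing every -gencode pair by hand, the flags can be generated from the compute capabilities listed in Table 1. The Bash helper below (hypothetical name gencode_flags) emits one arch=compute_XY,code=sm_XY pair per capability, reproducing the flag list above when given all CHPC capabilities.

```bash
#!/usr/bin/env bash
# Sketch: turn compute capabilities (e.g. 8.6) into nvcc -gencode flags.
gencode_flags() {
    local cc sm
    local -a flags=()
    for cc in "$@"; do
        sm=${cc//./}              # "8.6" -> "86"
        flags+=(-gencode "arch=compute_${sm},code=sm_${sm}")
    done
    echo "${flags[*]}"
}

# Example (word splitting of the result is intended):
#   nvcc $(gencode_flags 5.2 6.0 6.1 7.0 7.5 8.0 8.6 8.9 9.0) -o my_program my_code.cu
```

Building for fewer architectures shortens compile time and shrinks the binary, so you may prefer to pass only the capabilities of the GPUs you actually request.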

Note: The Maxwell, Pascal, and Volta architectures are now feature-complete. While CUDA 12.x still supports building applications for these architectures, offline compilation and library support will be removed in the next major CUDA Toolkit release.

NVIDIA HPC Software Development Kit (SDK)

We offer the NVIDIA HPC SDK, a comprehensive toolkit for developing high-performance computing (HPC) applications that run on both CPUs and GPUs, supporting standard programming models like Fortran, OpenACC, OpenMP, and MPI.

The NVIDIA HPC SDK can be accessed by running:

module load nvhpc

Compiling using nvc, nvc++, nvfortran

The legacy PGI compilers pgcc (C), pgc++ (C++), and pgf90 (Fortran) have evolved into nvc, nvc++, and nvfortran, respectively. nvc is a C compiler for NVIDIA GPUs as well as AMD and Intel CPUs. The nvc C11 compiler supports GPU parallel programming with OpenACC and multicore CPU programming with both OpenACC and OpenMP.

Its C++ counterpart, nvc++, supports C++17. The Fortran equivalent, nvfortran, supports ISO Fortran 2003 and many features of Fortran 2008.

To compile .cu (CUDA) code with nvc and target specific compute capabilities, use the -gpu flag followed by all the compute capabilities you want to support. For example:

nvc -cuda -gpu=cc52,cc60,cc61,cc70,cc75,cc80,cc86,cc89,cc90 your_code.cu -o your_program

Useful flags:

- -cuda: enables CUDA compilation mode.
- -fast: enables aggressive optimizations.
- -Minfo=accel: displays information about accelerator optimizations.
- -acc: use this if you are mixing CUDA with OpenACC.

Libraries

The NVIDIA HPC SDK includes a suite of math libraries for offloading computations to GPUs, and communication libraries for high-speed data exchange between multiple GPUs. The math libraries are located in the math_libs subdirectory, while the communication libraries can be found in the comm_libs subdirectory.

To compile and link with, for example, cuBLAS, use the following flags:

- Compilation line: -I$NVROOT/math_libs/include
- Linking line: -L$NVROOT/math_libs/lib64 -Wl,-rpath=$NVROOT/math_libs/lib64 -lcublas
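The same include/library pattern applies to the other math libraries in math_libs. The Bash sketch below prints the flags for any one of them; it assumes that NVROOT is already set to the NVIDIA HPC SDK directory containing math_libs (the text above does not say which module sets it), and the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compile and link flags for one HPC SDK math library
# (e.g. cublas, cufft, cusolver), following the cuBLAS pattern.
# Assumes NVROOT points at the SDK directory that contains math_libs/.
nvhpc_math_flags() {
    local lib=$1
    if [[ -z ${NVROOT:-} ]]; then
        echo "NVROOT is not set" >&2
        return 1
    fi
    echo "-I$NVROOT/math_libs/include -L$NVROOT/math_libs/lib64 -Wl,-rpath=$NVROOT/math_libs/lib64 -l$lib"
}

# Example: nvc your_code.c $(nvhpc_math_flags cublas) -o your_program
```

The -Wl,-rpath part embeds the library path in the executable, so the program finds the library at run time without setting LD_LIBRARY_PATH.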

Debugging

The NVIDIA HPC SDK and CUDA distributions include a terminal-based debugger called cuda-gdb, which operates similarly to the GNU gdb debugger. For more information, see the cuda-gdb documentation.

To enable debugging information:

- For host (CPU) debugging:

  nvc -g -o your_program your_code.c

- For device (GPU) debugging:

  nvc -cuda -g -G -o your_program your_code.cu

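To detect out-of-bounds and misaligned memory access errors, use the cuda-memcheck tool or, with CUDA 12 and later (where cuda-memcheck has been removed), its replacement compute-sanitizer. Detailed usage instructions can be found in the cuda-memcheck documentation.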

We also license the DDT debugger, which supports CUDA and OpenACC debugging. Due to its user-friendly graphical interface, we recommend DDT for GPU debugging. For guidance on using DDT, please look at our debugging page.

Profiling

Profiling is a valuable technique for identifying GPU performance issues, such as inefficient GPU utilization or suboptimal use of shared memory. NVIDIA provides the Nsight Systems profiler (graphical interface: nsight-sys) and the Nsight Compute profiler, whose command-line interface is ncu.

Note: We use the GPU hardware performance counters for GPU monitoring, which prevents profiling by default. This issue typically manifests with the following error message:

```
$ ncu ./my-gpu-program
==ERROR== Profiling failed because a driver resource was unavailable. Ensure that no other tool (like DCGM) is concurrently collecting profiling data. See https://docs.nvidia.com/nsight-compute/ProfilingGuide/index.html#faq for more details.
```

To enable profiling, we provide an additional gres, nsight, which you add to your GPU request as nsight:1. For example:

salloc -N 1 -n 4 -A owner-gpu-guest -p notchpeak-gpu-guest -t 1:00:00 --gres=gpu:a40:1,nsight:1

In the Open OnDemand interactive app forms, check the "Enable GPU profiling" checkbox, which appears when a GPU type is chosen.